Machine Learning Software Testing: how to develop and test machine learning scripts for iOS

Image, text and speech recognition, stock prediction, disease detection, error reduction, human extension - the field of machine learning is vast. While it has been around since the fifties, we are reaching the point where it is becoming part of our daily lives. It is turning into something routine, growing all around us without our noticing. This may sound like science fiction, but it is real, and with current technological advances it goes with us everywhere. Just think about your smartphone: it knows the music you prefer, tells you the best route home, listens to voice commands, and sorts images by the people and places in them. It reads your messages, texts and emails and helps you write them. Beyond that, it connects with a smart car that drives itself to the airport so we can board an airplane, almost completely controlled by machines, for a long vacation at our favorite place to relax and think of something else.

It takes time and training for a machine to learn how to be useful or to perform even a simple human task. That is one of the main reasons progress has been slow so far, but that is changing remarkably fast, especially on mobile devices like iPads and iPhones. Hardware architectures are getting more impressive, and work that 50 years ago took months on top computers now takes hours or even minutes. Apple is aware of this and is releasing tools for us developers to take advantage of it and create new and impressive experiences.

Since iOS 10 hit the market, we have been able to get our hands on Siri speech recognition, an audio-based machine learning algorithm in which the device records some audio and then converts it into text. That text can later be processed in detail to get more out of it, much in the same way Siri makes sense of it and tells you a joke. Modeled on how the human brain works, a neural network consists of a net of interconnected neurons that process electrical signals to create meaning. For example, my friend José says "hola", and my eardrum transforms the vibrations in the air into signals that are sent to the brain, which processes them and generates the thought that he is greeting me.


What appears to be a simple task requires me to already know a few things that I learned over time, like what a word means or how it is pronounced in another language. Machines need to do the same. They need to create connections between sounds, words and languages, and they do that by applying a series of mathematical operations to each neuron and to the connections between them. We use weights to measure the strength of the connection between neurons and biases to shift each neuron's output; both are adjusted based on the error the network produces.
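
To make the role of weights and biases concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function names, input values, weights and bias are all made up for illustration; this is not code from any Apple API.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)


# Hypothetical incoming signals from two other neurons.
signals = [0.5, 0.8]

# Strong connections (large weights) make the neuron fire more strongly
# than weak connections do for the same incoming signals.
strong = neuron_output(signals, weights=[2.0, 1.5], bias=-1.0)
weak = neuron_output(signals, weights=[0.1, 0.1], bias=-1.0)

print(strong > weak)  # True
```

The same signals produce a very different output depending on the weights, which is why training a network amounts to finding good values for them.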

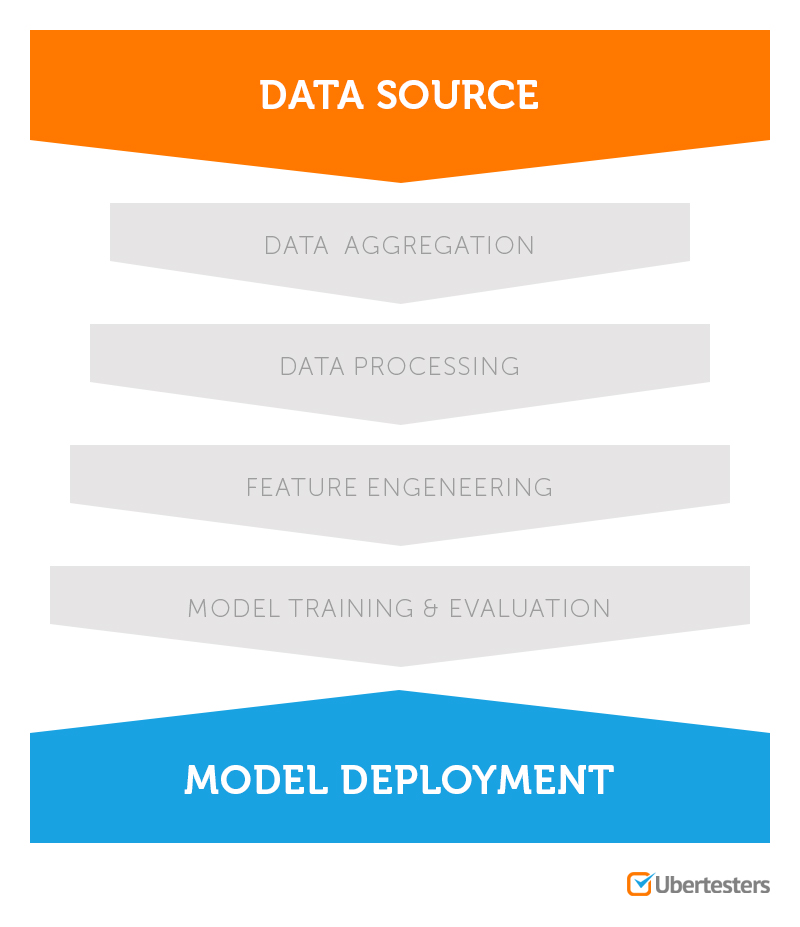

Learning happens through an operation called gradient descent, which brings the network's output closer to the expected output by measuring the error in its prediction. The input could be an image of a dog and the output could be any type of animal; the gradient descent process iterates a number of times, improving the result with each iteration: bird, snake, horse, cat, dog.

So far Apple has released two new APIs for creating neural networks, BNNS and Metal CNN. Both provide operations mostly focused on processing images through convolution. They are limited to inference with pre-trained models and cannot train new ones. An advantage that machines have is that they can transfer their knowledge, that is, their weights and biases, in the same way you copy and paste a file. If you pre-train a model on hundreds of thousands of images to distinguish a variety of objects, you can transfer that knowledge to a smartphone and use it there. The result may not be as accurate, but it would be good enough to interpret your data. You can still implement your own backpropagation and gradient descent operations to reduce the error if you want your application to learn something, but it will be computationally costly and not very efficient. I am pretty sure Apple is already working on solutions to this, but in the meantime we can only wait or do it ourselves.
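
The gradient descent idea described above can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: a single linear neuron, a mean squared error, and made-up training samples that follow the rule `target = 2 * x + 1`. The learning rate and iteration count are arbitrary choices, not values from any Apple API.

```python
def mean_squared_error(weight, bias, samples):
    """Average squared difference between prediction and target."""
    return sum((weight * x + bias - t) ** 2 for x, t in samples) / len(samples)


def train(samples, learning_rate=0.1, iterations=500):
    """Fit prediction = weight * x + bias by gradient descent."""
    weight, bias = 0.0, 0.0
    n = len(samples)
    for _ in range(iterations):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in samples:
            error = (weight * x + bias) - target
            # Partial derivatives of the mean squared error.
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        # Step against the gradient to reduce the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# Made-up samples that follow target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

error_before = mean_squared_error(0.0, 0.0, samples)
weight, bias = train(samples)
error_after = mean_squared_error(weight, bias, samples)

print(round(weight, 3), round(bias, 3))
print(error_after < error_before)
```

Each iteration measures the error of the current prediction and nudges the weight and bias in the direction that reduces it, so the learned values approach 2 and 1.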


The first API, BNNS, which stands for Basic Neural Network Subroutines, is optimized to take full advantage of the CPU. It provides a set of activation functions and network filters. The activation functions calculate the value of each neuron and include linear, sigmoid, tanh and abs, while the filters process fragments of images to extract features. The most common operations for this, and the ones provided in the API, are max pooling and average pooling, which calculate the maximum or the average value in groups of image pixels to create what the machine understands as a feature.

The second API, Metal Performance Shaders CNN (convolutional neural network), works in a very similar way with some variations, but the most important difference is that it uses the GPU instead of the CPU to increase the performance of the network. This is because the data is transformed into vectors, and vector operations are more efficient on GPUs. With both APIs you can accomplish similar tasks, but Metal is optimized for real-time computer vision, so the choice depends on your needs. Does your app need to process images from the camera in real time? Then use Metal. Will it take an image from your library and do something with it? Then you can use BNNS. It is up to you and your project: your app could interpret numbers or text from pictures of handwriting, sort your images into separate folders, estimate the change in the Apple stock through a year, generate random jokes or poems, and possibly generate a new song. Only time will tell what we can accomplish, but we sure are living in interesting times, and it is becoming an exciting experience to build and create all kinds of things.
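
To show what the activation functions and pooling filters mentioned for BNNS actually compute, here is a small plain-Python sketch. It does not call BNNS or Metal; the `pool2d` helper, the `ACTIVATIONS` table and the 4x4 "image" are hypothetical names and data chosen for illustration, and the pooling windows are assumed to be non-overlapping.

```python
import math

# The four activation functions named in the text, as plain functions.
ACTIVATIONS = {
    "linear": lambda x: x,
    "sigmoid": lambda x: 1.0 / (1.0 + math.exp(-x)),
    "tanh": math.tanh,
    "abs": abs,
}


def average(values):
    return sum(values) / len(values)


def pool2d(image, size, reducer):
    """Reduce each non-overlapping size x size window to a single value."""
    rows, cols = len(image), len(image[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            window = [image[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(reducer(window))
        pooled.append(pooled_row)
    return pooled


# A made-up 4x4 grayscale image.
image = [
    [1, 3, 2, 0],
    [5, 7, 1, 1],
    [0, 2, 9, 4],
    [4, 2, 3, 8],
]

max_pooled = pool2d(image, 2, max)
avg_pooled = pool2d(image, 2, average)

print(max_pooled)  # [[7, 2], [4, 9]]
print(avg_pooled)  # [[4.0, 1.0], [2.0, 6.0]]
```

Both filters shrink the image to a quarter of its size; max pooling keeps the strongest pixel in each group, while average pooling keeps the overall intensity of the group.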


About the Author:

Guillermo Irigoyen, iOS Computer Systems Analyst at AT&T, CTO and Co-Founder at Darc Data. Guillermo has 8 years of experience in software development. He started as a multimedia artist, focusing on building and programming art installations for museums and events with programming languages like Java, C and Python, controlling Linux boxes, Raspberry Pis, Arduinos and servers.


Expert’s comment

Alex Willson, QA Manager at Ubertesters (@alexwilsonubertesters)

What's the best way to test machine learning code and scripts for iOS and Android? To answer this question, I’ll divide my overview into 3 short main points.


What is the main problem of machine learning software testing?

Machine learning technology has to deal with large and uncertain amounts of data. The problem is that an ML model doesn’t have a strictly right or wrong result (“0” or “1”) as traditional software does. To avoid failing during testing sessions, we should keep the ML scenarios in mind and continually ask ourselves:

- Did we create testing tasks that correlate with the ML scenario?

- What ML Toolbox should we choose?

There are many sources of errors for machine learning.

The main ones are:

- Some important fraction of your data is bad or mislabeled.

- You have too much/little data.

- You chose an inappropriate algorithm for your objective.

- There are errors in the implementation, etc.

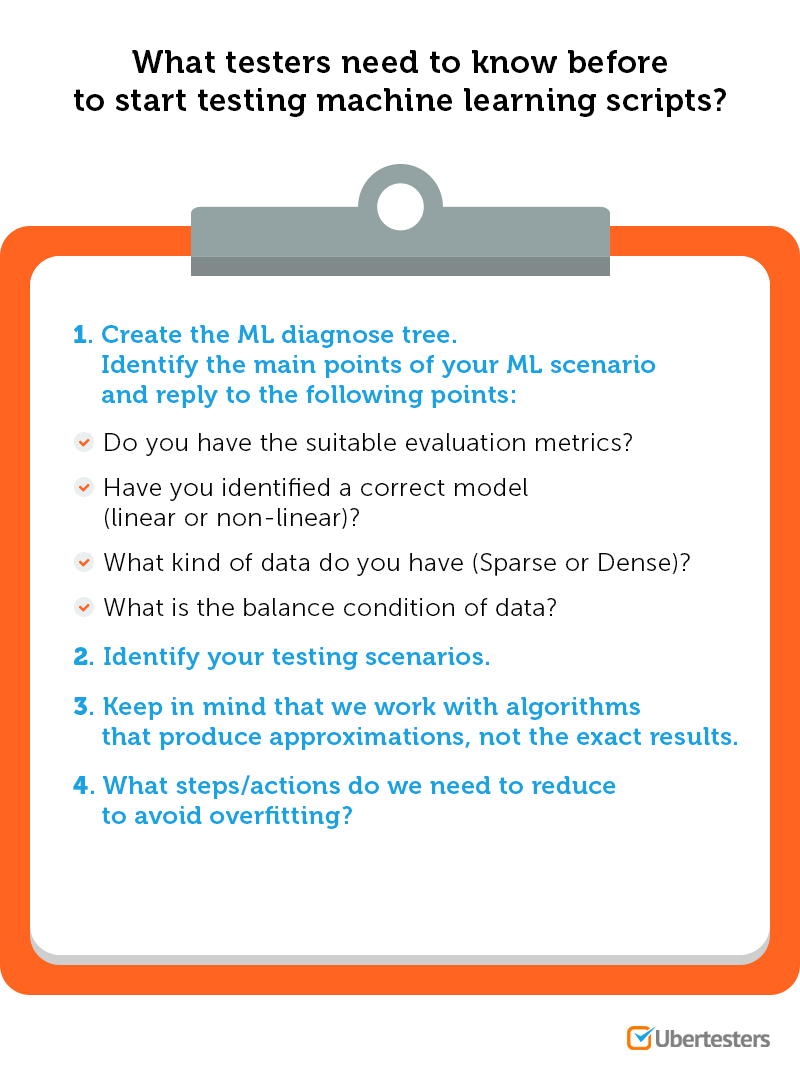

What do testers need to know before they start testing machine learning scripts?

- Create an ML diagnosis tree. Identify the main points of your ML scenario and answer the following questions:

- Do you have suitable evaluation metrics?

- Have you identified a correct model (linear or non-linear)?

- What type of data do you have (Sparse or Dense)?

- How balanced is your data?

- Identify your testing scenarios.

- Keep in mind that we work with algorithms that produce approximations, not exact results.

- What steps do we need to take to avoid overfitting?

- Evaluating ML algorithms is a must! We should understand how to evaluate machine learning algorithms and what to expect at the end of the process.
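
The questions above about evaluation metrics and data balance can be illustrated with a short plain-Python sketch. The labels, the helper names and the always-"cat" predictions are made up for the example; the point is only that a single metric such as accuracy can look good on unbalanced data while the model is useless for the rare class.

```python
from collections import Counter


def class_balance(labels):
    """Share of each label in the data set."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def accuracy(actual, predicted):
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)


def precision_recall(actual, predicted, positive):
    """Precision and recall for one class treated as the positive class."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up, unbalanced data: nine cats and one dog.
actual = ["cat"] * 9 + ["dog"]
# A hypothetical model that always answers "cat".
predicted = ["cat"] * 10

balance = class_balance(actual)
overall_accuracy = accuracy(actual, predicted)
dog_precision, dog_recall = precision_recall(actual, predicted, "dog")

print(balance)
print(overall_accuracy)
print(dog_precision, dog_recall)
```

Here the accuracy is high only because cats dominate the data; the recall for dogs shows that the model never finds one, which is why the balance of the data should drive the choice of metric.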

Machine learning testing tips

- Create objective acceptance criteria. Try to specify the number of errors that your users are willing to accept.

- Your ML architecture is part of the testing process. Understanding how the network is constructed will help your QA team determine the critical points for getting better results.

- Test scripts with new data. Once you’ve trained the network and frozen the architecture and coefficients, use fresh inputs and outputs to verify its accuracy.

- Don’t consider all the results to be accurate.

- There is no single right or wrong result. We are working with machine learning A/B testing.
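
As a rough sketch of how these tips could turn into an automated check, the plain-Python example below tests a frozen model against fresh data and an agreed error threshold, then compares two model variants on the same data. Everything in it is hypothetical: the threshold-based "models", the sample values and the acceptance limits stand in for your real network and your real criteria, and reading A/B testing as a side-by-side comparison of two variants is an assumption.

```python
def make_threshold_model(threshold):
    """Hypothetical stand-in for a frozen, pre-trained classifier."""
    def model(value):
        return "high" if value > threshold else "low"
    return model


def error_rate(model, samples):
    """Share of samples where the prediction differs from the expected label."""
    wrong = sum(1 for features, expected in samples if model(features) != expected)
    return wrong / len(samples)


def meets_acceptance(model, fresh_samples, max_error_rate):
    """Acceptance criterion: the error rate stays within the agreed limit."""
    return error_rate(model, fresh_samples) <= max_error_rate


# Fresh data the model did not see during training (made-up values).
fresh_samples = [
    (0.9, "high"),
    (0.2, "low"),
    (0.7, "high"),
    (0.4, "low"),
    (0.55, "low"),
]

model_a = make_threshold_model(0.5)
model_b = make_threshold_model(0.6)

print(error_rate(model_a, fresh_samples))              # 0.2
print(meets_acceptance(model_a, fresh_samples, 0.25))  # True
print(meets_acceptance(model_a, fresh_samples, 0.1))   # False

# A/B style comparison: keep the variant with the lower error on the same data.
better = min((model_a, model_b), key=lambda m: error_rate(m, fresh_samples))
print(better is model_b)                               # True
```

The test passes or fails against a tolerance instead of an exact expected output, which matches the idea that an ML result is an approximation and never a single right or wrong answer.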

Have a question about ML testing? Send your e-mail to Alex directly!