AI Chatbot Testing at Scale: The Power of the Crowd

Chatbots powered by AI are revolutionizing how businesses interact with their customers at a breakneck pace. According to ResearchAndMarkets, the global AI chatbot market is projected to reach $46.64 billion by 2029, expanding at a 24.53% CAGR.

From websites to messaging platforms, AI chatbots are becoming the front line of customer engagement, sales, and support. They provide faster response times, streamlined workflows, and enhanced user experiences. Whether it is a simple virtual assistant or a sophisticated AI agent, these bots are taking over tasks that human teams previously performed.

But here’s the twist — simply putting out a chatbot is not sufficient. To actually get these bots to perform, chatbot testing must be rigorous, ongoing, and realistic. Traditional QA falls short here. Why? Because AI chatbot QA is not just about making sure the code executes — it’s about how well the bot can talk to actual humans in real-world environments.

Why AI Chatbot Testing is Uniquely Critical (and Complex)

Testing a chatbot isn’t like testing a static app feature. These bots deal with open-ended language, emotions, and high-stakes business functions.

High User Expectations

Users expect chatbots to be fast, smart, and as helpful as a human being. If the bot does not understand them or gives a generic reply, they will leave.

Chatbot usability testing has to ensure that any user query, no matter how vague or specific, leads to a positive experience. There is little tolerance for error, especially in industries like finance or healthcare.

Risks of Failure

The consequences of poor AI chatbot testing go far beyond just one bad conversation. A failing bot can:

- Frustrate customers, leading to churn

- Damage your brand reputation

- Result in lost conversions or revenue

- Introduce security vulnerabilities through weak integrations or poor logic

Chatbot quality assurance isn’t merely a nice-to-have; it’s a business necessity.

NLP Nuances: Intent, Slang, Typos, and More

This is where the challenge arises. Natural Language Processing (NLP) chatbot testing must account for how real humans communicate: typos, slang, sarcasm, multiple intents per message, and regional dialects. It’s not just about what the user said; it’s about what they meant. That’s why conversational AI testing is significantly more difficult than run-of-the-mill QA.
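One way to prepare for that variety is to expand each scripted test utterance into noisy variants before handing it to the bot. The sketch below is illustrative only: the `SLANG` map and the `variants` helper are hypothetical names, and a real slang list would be built from actual crowd transcripts rather than hard-coded.

```python
import random
import re

# Hypothetical slang map for illustration; not taken from any real dataset.
SLANG = {"please": "pls", "thanks": "thx", "you": "u", "want to": "wanna"}


def swap_typo(text: str, rng: random.Random) -> str:
    """Swap two adjacent letters to mimic a typing slip."""
    positions = [
        i for i in range(len(text) - 1)
        if text[i].isalpha() and text[i + 1].isalpha()
    ]
    if not positions:
        return text
    i = rng.choice(positions)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def apply_slang(text: str) -> str:
    """Replace whole words or phrases with casual equivalents."""
    out = text
    for formal, casual in SLANG.items():
        out = re.sub(r"\b" + re.escape(formal) + r"\b", casual, out)
    return out


def variants(utterance: str, seed: int = 0) -> list[str]:
    """Return the original utterance plus five noisy versions of it."""
    rng = random.Random(seed)  # seeded so test runs are repeatable
    lowered = utterance.lower()
    return [
        utterance,
        lowered,
        utterance.upper(),
        apply_slang(lowered),
        swap_typo(lowered, rng),
        lowered.rstrip("?.!"),  # users often drop punctuation
    ]


if __name__ == "__main__":
    for v in variants("Can you please cancel my order?"):
        print(v)
```

Each variant should resolve to the same intent as the original. Generated noise like this only covers the predictable cases; sarcasm and dialect still need real people.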

Integration Points: Where Things Often Break

Most chatbots are integrated with CRMs, payment gateways, calendars, or other software. Each integration is a point of failure. Functional testing of chatbots should ensure these integrations are working smoothly in production. And this is where typical internal testing often breaks down.

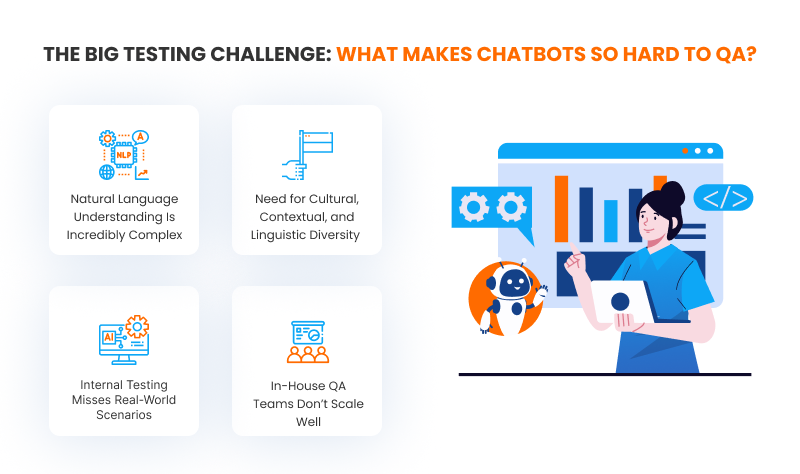

The Big Testing Challenge: What Makes Chatbots So Hard to QA?

Even experienced QA teams struggle with AI chatbot QA. That’s because bots are dynamic, unpredictable, and rely heavily on context.

Natural Language Understanding Is Incredibly Complex

Language is not governed by formal rules the way code is. A single question may be posed 50 ways. Testing AI chatbots with actual users shows whether the bot actually understands user intent or is merely matching keywords.
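To make the keyword-matching problem concrete, here is a toy sketch. The `keyword_bot` function and the paraphrase list are invented for illustration and do not represent any particular product; the point is only that paraphrases of one intent can slip past literal matching.

```python
def keyword_bot(message: str) -> str:
    """Toy bot that 'understands' only by spotting literal keywords."""
    text = message.lower()
    if "refund" in text:
        return "refund_request"
    if "cancel" in text:
        return "cancel_order"
    return "fallback"


# Hypothetical paraphrases that testers might produce for a single intent.
PARAPHRASES = [
    "I want a refund",
    "Can I get my money back?",
    "Please reimburse me for this order",
    "refund pls",
    "This arrived broken, I'd like to return it and be paid back",
]


def intent_accuracy(bot, phrases, expected: str) -> float:
    """Fraction of phrases for which the bot returns the expected intent."""
    hits = sum(1 for phrase in phrases if bot(phrase) == expected)
    return hits / len(phrases)


if __name__ == "__main__":
    score = intent_accuracy(keyword_bot, PARAPHRASES, "refund_request")
    print(f"refund intent recognized in {score:.0%} of paraphrases")
```

Only the two phrases that contain the literal word "refund" are recognized here, which is the kind of gap that real user phrasing tends to expose.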

Need for Cultural, Contextual, and Linguistic Diversity

A bot that works well in English might stumble in Spanish. One that understands U.S. slang might fail with U.K. users. That’s why multilingual chatbot testing and diverse input scenarios are essential for building bots that can serve global audiences.

Internal Testing Misses Real-World Scenarios

In-house teams often simulate user behavior, but they can’t replicate real frustration, unexpected questions, or edge cases. Real-world users bring unpredictable interactions that expose hidden flaws. That’s what makes chatbot user experience testing so vital.

In-House QA Teams Don’t Scale Well

Bots might serve millions of users, but internal QA teams can’t simulate that level of usage. For scalable chatbot testing, you need distributed, on-demand resources — not just a handful of testers in a lab.

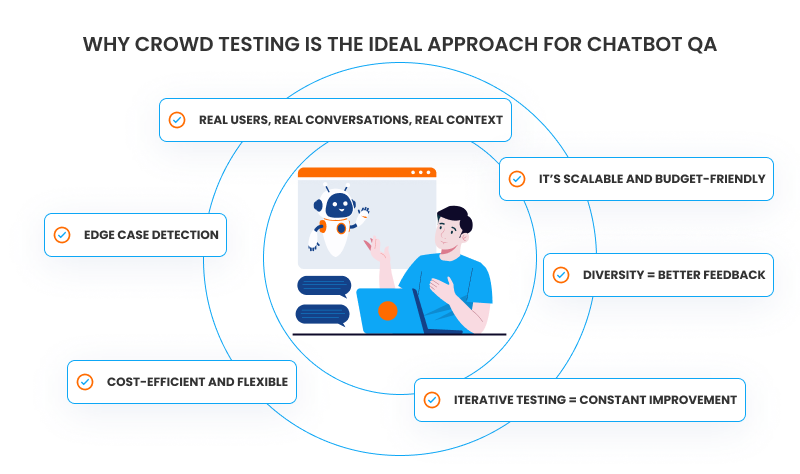

Why Crowd Testing Is the Ideal Approach for Chatbot QA

This is where crowd testing of chatbots is a game-changer. By leveraging a diverse set of real users from across the globe, it provides unparalleled scale and realism.

Real Users, Real Conversations, Real Context

Crowd testers are not like users; they are users. They talk, behave, and respond the way real people do. This leads to more accurate insights and reveals issues you’d never catch in a controlled lab setting.

It’s Scalable and Budget-Friendly

You gain immediate access to thousands of testers without having to train or recruit anyone internally. That’s why crowdsourced QA testing of chatbots is becoming the new norm for fast-moving tech teams.

Diversity = Better Feedback

Testers from around the globe bring a multitude of languages, accents, cultures, and expectations. This diversity is crucial for usability testing of chatbots, particularly when preparing bots for global audiences.

Iterative Testing = Constant Improvement

Crowd testing lets you run the test, fix, and re-test loop repeatedly, improving performance over time. That’s the key to reducing chatbot errors and maximizing long-term ROI.

Edge Case Detection

Strange queries, broken logic paths, weird user journeys — real users will find them. Chatbot performance testing with the crowd often uncovers unexpected behaviors missed in pre-launch QA.

Cost-Efficient and Flexible

With pay-as-you-go pricing and 24/7 access, crowd testing is ideal for agile teams. It aligns with modern development cycles and adapts to changing chatbot strategies.

What Crowd Testing Actually Covers in Chatbot QA

You might wonder what parts of a chatbot actually get tested by the crowd. The answer: pretty much everything that matters.

Conversation Flow and Logic

Does the bot hold the user’s interest? Does it ask meaningful follow-up questions? Can it keep a conversation going? Chatbot testing providers run these flows on various devices and channels to verify consistency.

Response Relevance & Appropriateness

Users judge a bot by its responses. A chatbot testing strategy needs to include tests for relevance, clarity, and tone. This is where chatbot quality assurance proves itself.

Language and Tone Consistency

Whether your brand is casual or corporate, the bot has to reflect that tone. Conversational AI testing checks for tone inconsistencies and stilted speech that damage UX.

Multilingual Testing

Global brands need bots that speak more than English. Multilingual chatbot testing validates translations, contextual accuracy, and cultural awareness. This prevents confusion and improves global user satisfaction.

Edge Cases and Wild Scenarios

Actual users attempt to challenge bots in ways that developers do not foresee. Crowd testing captures:

- Misspelled input

- Offensive language

- Wordy rants

- Half-typed questions

Catching these is essential for intelligent and safe bot behavior.
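The four input types listed above can also be kept as a small regression suite that runs alongside crowd sessions. This is a minimal sketch under stated assumptions: `bot` is any callable that takes a message string and returns a reply string, and the sample inputs and the 500-character reply limit are invented for illustration.

```python
# Sample inputs for the four categories above; the wording is illustrative.
EDGE_CASES = {
    "misspelled": "wher is my ordr",
    "offensive": "this bot is %$#@ useless",
    "rant": "I have been waiting for three weeks " * 20,
    "half_typed": "can you tell me how to",
}


def check_edge_cases(bot, max_reply_chars: int = 500) -> dict:
    """Return {case_name: problem} for every input the bot handles badly."""
    problems = {}
    for name, message in EDGE_CASES.items():
        try:
            reply = bot(message)
        except Exception as exc:  # a crash is the worst possible outcome
            problems[name] = f"raised {type(exc).__name__}"
            continue
        if not isinstance(reply, str) or not reply.strip():
            problems[name] = "empty reply"
        elif len(reply) > max_reply_chars:
            problems[name] = "reply too long"
    return problems
```

An empty result means the bot at least answered every input without crashing. It says nothing about whether the answers were appropriate, which is still a judgment call for human testers.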

User Experience and Accessibility

Can users easily navigate the chat? Are buttons and CTAs working? Is it screen-reader friendly? Chatbot user experience testing ensures the bot is usable for everyone.

Error Handling & Fallbacks

Bots must know how to fail gracefully. Crowd testers evaluate error handling and fallback logic under pressure. The goal? Don’t let a user hit a dead end.
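A dead end can be approximated in logged transcripts as several fallback replies in a row. The sketch below assumes each logged turn carries an `is_fallback` flag; the `Turn` structure, that flag, and the threshold of two are illustrative assumptions, not a standard log format.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    user: str
    bot: str
    is_fallback: bool  # assumed to be recorded by the bot platform


def find_dead_ends(turns, max_consecutive: int = 2) -> list[int]:
    """Return the index of each turn where a fallback streak hits the limit."""
    dead_ends = []
    streak = 0
    for i, turn in enumerate(turns):
        streak = streak + 1 if turn.is_fallback else 0
        if streak == max_consecutive:  # report each streak once
            dead_ends.append(i)
    return dead_ends
```

Flagged turns are good candidates for a human handoff or a clearer recovery prompt.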

Platform-Specific Behavior

Whether your bot is deployed on Messenger, Slack, WhatsApp, or the web, each channel behaves differently. To prevent platform-specific failures, functional testing for chatbots has to cover each deployment channel.
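Channel coverage is easier to plan when it is written out as an explicit test matrix. In the sketch below the channel names come from the paragraph above, while the language codes and flow names are hypothetical placeholders to replace with your own.

```python
from itertools import product

CHANNELS = ["messenger", "slack", "whatsapp", "web"]
LANGUAGES = ["en", "es"]            # placeholder language codes
FLOWS = ["order_status", "refund"]  # hypothetical conversation flows


def build_matrix(channels, languages, flows) -> list[dict]:
    """Return one test assignment per channel, language, and flow combination."""
    return [
        {"channel": channel, "language": language, "flow": flow}
        for channel, language, flow in product(channels, languages, flows)
    ]


if __name__ == "__main__":
    matrix = build_matrix(CHANNELS, LANGUAGES, FLOWS)
    print(f"{len(matrix)} assignments to distribute across testers")
```

The matrix grows multiplicatively, so each added channel or language increases the number of assignments that need a tester.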

Best Practices for Effective Chatbot Crowd Testing

To get the most from AI chatbot QA, you need more than just a crowd — you need a plan.

Start with Clear Goals and Test Cases

What do you want to learn from the test? Define use cases, KPIs, and user flows before release. This helps you evaluate success and filter for valuable feedback.

Create Personas That Reflect Your Real Users

Your bot may be used by college students, retirees, or enterprise users. Have your testers stick to those profiles. This makes your chatbot testing approach more accurate.

Choose the Right Crowd Testing Platform

Not every platform is created equal. Look for those with specific expertise in chatbot testing services, multilingual capabilities, and quick turnaround. Experience in AI agent testing is also a plus.

Define What Success Looks Like

Fewer fallbacks? Higher task completion? Improved satisfaction scores? Create clear metrics for your chatbot QA at scale so you can track progress over time.
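These three metrics can be computed directly from session records. The following is a rough sketch: the `Session` fields and the 1 to 5 satisfaction scale are assumptions made for illustration, so adapt them to whatever your testing platform actually exports.

```python
from dataclasses import dataclass


@dataclass
class Session:
    turns: int            # number of bot replies in the session
    fallbacks: int        # how many of those replies were fallbacks
    task_completed: bool  # did the tester reach the goal?
    satisfaction: int     # assumed 1-5 rating given by the tester


def summarize(sessions) -> dict:
    """Aggregate fallback rate, task completion, and satisfaction."""
    total_turns = sum(s.turns for s in sessions)
    if not sessions or total_turns == 0:
        raise ValueError("need at least one session with at least one turn")
    count = len(sessions)
    return {
        "fallback_rate": sum(s.fallbacks for s in sessions) / total_turns,
        "task_completion_rate": sum(s.task_completed for s in sessions) / count,
        "avg_satisfaction": sum(s.satisfaction for s in sessions) / count,
    }
```

Comparing the same summary across test rounds shows whether a fix actually moved the numbers.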

Feedback Loop: Make It Continuous

Testing is not one-and-done. You need a feedback loop in which developers react quickly to tester input. That’s how you achieve ongoing improvement through agile cycles.

Monitor, Iterate, Repeat

After rollout, keep testing. Bots need updating, and user requirements shift. Regular AI chatbot testing keeps your bot in optimal condition.

Wrapping It All Up

AI chatbots are not just tools; they are the face of your company. A buggy or boring bot will cost you customers, revenue, and reputation. With crowd testing of chatbots, you can release smart, scalable, and trustworthy bots. You get feedback from real users in real time, which allows you to iterate on dialogue, squash bugs, and build trust at scale.

In a competitive market, proper chatbot QA at scale gives you the edge. It’s how leading companies turn good bots into great experiences. If you’re still relying on internal teams alone, it’s time to rethink your chatbot testing approach. Because nowadays, bots don’t just need to work — they must dazzle.

Let’s make your chatbot unforgettable. Talk to our team today.